LoRA meets dropout under a unified framework

Aug 1, 2024

Sheng Wang (equal contribution), Liheng Chen, Jiyue Jiang, Boyang Xue, Lingpeng Kong, Chuan Wu

Abstract

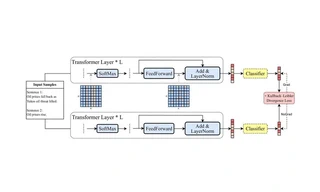

This paper presents a unified framework connecting LoRA and dropout, providing theoretical insights and practical improvements for parameter-efficient fine-tuning.

Publication

In Findings of the Association for Computational Linguistics: ACL 2024

Authors

Sheng Wang

(Forence)

PhD Graduate in Computer Science

Sheng Wang is a PhD graduate of The University of Hong Kong, where he was supervised by Prof. Chuan Wu and Prof. Lingpeng Kong. His research focuses on agents, LLM super-alignment, and data synthesis. He has published more than 14 papers at top-tier conferences, including NeurIPS 2025 (Spotlight), ICLR 2025, ACL 2024/2025, and EMNLP 2025.
