Forewarned is Forearmed: Harnessing LLMs for Data Synthesis via Failure-Induced Exploration

Jan 1, 2025

Qintong Li

Jiahui Gao

Sheng Wang

Renjie Pi

Xueliang Zhao

Chuan Wu

Xin Jiang

Zhenguo Li

Lingpeng Kong

Abstract

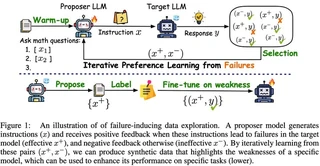

Large language models (LLMs) have significantly benefited from training on diverse, high-quality task-specific data. This paper presents ReverseGen, a novel approach designed to automatically generate effective training samples that expose the weaknesses of LLMs through failure-inducing exploration.

Publication

In The Thirteenth International Conference on Learning Representations (ICLR 2025)

Authors

Sheng Wang

(Forence)

PhD Graduate in Computer Science

Sheng Wang is a PhD graduate from The University of Hong Kong, supervised by Prof. Chuan Wu and Prof. Lingpeng Kong.

His research focuses on LLM agents, LLM super-alignment, and data synthesis. He has published 14+ papers in top-tier conferences, including NeurIPS 2025 (Spotlight), ICLR 2025, ACL 2024/2025, and EMNLP 2025.