How Well Do LLMs Handle Cantonese? Benchmarking Cantonese Capabilities of Large Language Models

Jan 1, 2025

Jiyue Jiang

Pengan Chen

Liheng Chen

Sheng Wang

Qinghang Bao

Lingpeng Kong

Yu Li

Chuan Wu

Abstract

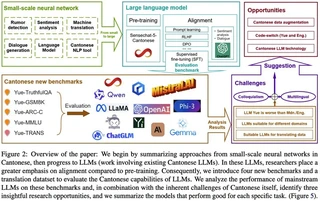

The rapid evolution of large language models (LLMs) has transformed the competitive landscape in natural language processing (NLP), particularly for English and other data-rich languages. However, underrepresented languages like Cantonese, spoken by over 85 million people, face significant development gaps. This paper introduces new benchmarks designed to evaluate LLM performance in factual generation, mathematical logic, complex reasoning, and general knowledge in Cantonese.

Publication

In The 2025 Annual Conference of the Nations of the Americas Chapter of the ACL

Authors

Sheng Wang

(Forence)

PhD Graduate in Computer Science

Sheng Wang is a PhD graduate from The University of Hong Kong, supervised by Prof. Chuan Wu and Prof. Lingpeng Kong.

His research focuses on agents, LLM super-alignment, and data synthesis. He has published 14+ papers at top-tier conferences, including NeurIPS 2025 (Spotlight), ICLR 2025, ACL 2024/2025, and EMNLP 2025.
